A cache can be an immediate rescue for your application or a well-designed part of your entire system. Below are the most common layers of a web architecture where caching can be applied. Depending on the kind of application you are working on, you may have more or fewer of them.

Cacheable layers

Frontend

There are several possibilities here. Keeping data in memory is probably the easiest way to store what you load from an endpoint. If you use React or Vue, you probably know Redux or Pinia: state-management libraries that give you a structured way to access data held in memory.
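
The idea behind an in-memory cache is language-agnostic. Below is a minimal Python sketch of caching endpoint responses in memory with a time-to-live, similar in spirit to what a Redux or Pinia store holds. The TTLCache class and the fetch_user function are hypothetical, and fetch_user only stands in for a real HTTP call.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """In-memory cache whose entries expire `ttl` seconds after they are stored."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_load(self, key: str, loader: Callable[[], Any]) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # fresh hit: no endpoint call
        value = loader()  # miss or expired entry: call the endpoint
        self._entries[key] = (now + self.ttl, value)
        return value


calls = 0


def fetch_user(user_id: int) -> dict:
    """Hypothetical stand-in for an HTTP request to an endpoint."""
    global calls
    calls += 1
    return {"id": user_id, "name": f"user-{user_id}"}


cache = TTLCache(ttl=30)
first = cache.get_or_load("user:1", lambda: fetch_user(1))
second = cache.get_or_load("user:1", lambda: fetch_user(1))
print(calls)  # the second read is served from memory
```

The TTL is the simplest way to keep such a cache from serving stale data forever. Redux and Pinia leave that decision to you.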

Next, there are two similar Web Storage APIs: localStorage and sessionStorage. The difference between them is that sessionStorage is cleared when the page session ends (when the tab or window is closed), while localStorage persists across browser sessions until it is explicitly cleared. Both APIs are synchronous (blocking) and store data as string key-value pairs.

Another option available directly from JavaScript is IndexedDB. The most important difference from the previous ones is that its API is asynchronous (non-blocking). It is designed to store significant amounts of structured data, including files and blobs.

Of course, you can find more details on the MDN website, and I encourage you to explore everything the options above offer. There is also WebSQL, but do not waste time on it: it is deprecated and was never supported in all browsers (Firefox never implemented it).

HTTP server

There are several options here as well. The most popular servers are Apache and Nginx. They can also act as reverse proxies, especially in Kubernetes (k8s) clusters, where they often serve as load balancers.

Most of them can control caching (e.g. the expires directive in Nginx, which sets the Expires and Cache-Control response headers) or offer entire modules (e.g. ngx_pagespeed) that help speed up response times. As developers, we usually don't spend much time optimizing HTTP servers, and rightly so, since they are mostly intermediaries in the life cycle of a request.
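
Nginx's expires directive does not store responses itself. It adds headers that tell browsers and other caches how long to keep the response. For a plain time value, the nginx documentation says a positive or zero time yields "Cache-Control: max-age=t" and a negative time yields "Cache-Control: no-cache", with Expires set to the current time plus t. The Python sketch below builds the same pair of headers, which can be useful if you set them in application code instead. The helper name expires_headers is hypothetical.

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime
from typing import Dict, Optional


def expires_headers(seconds: int, now: Optional[datetime] = None) -> Dict[str, str]:
    """Mimic nginx `expires <seconds>;` for a plain time value (no epoch/max/modified)."""
    now = now or datetime.now(timezone.utc)
    expires_at = now + timedelta(seconds=seconds)  # in the past when seconds < 0
    cache_control = "no-cache" if seconds < 0 else f"max-age={seconds}"
    return {
        # HTTP dates use the RFC 1123 form, e.g. "Mon, 01 Jan 2024 01:00:00 GMT".
        "Expires": format_datetime(expires_at, usegmt=True),
        "Cache-Control": cache_control,
    }


headers = expires_headers(3600)  # e.g. for static assets
```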

Runtime

Here's some good news again. As with HTTP servers, optimization and caching usually happen out of the box. Most runtimes have built-in mechanisms that speed up code execution, either by caching compiled bytecode (e.g. Python's .pyc files, or PHP's OPcache, which superseded APC) or by just-in-time compilation (e.g. the Java JVM).
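
You can see the Python case directly. CPython writes compiled bytecode to a __pycache__ directory next to the source, and later imports reuse it as long as the source is unchanged. The sketch below compiles a throwaway module with the standard py_compile module and checks where the bytecode landed. The module name models.py is just an example.

```python
import importlib.util
import py_compile
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as tmp:
    source = Path(tmp) / "models.py"
    source.write_text("ANSWER = 42\n")

    # Where CPython keeps cached bytecode for this source file, e.g.
    # __pycache__/models.cpython-312.pyc (the tag depends on the interpreter).
    expected = importlib.util.cache_from_source(str(source))

    # A normal import writes this file automatically; py_compile does it on demand.
    written = py_compile.compile(str(source), doraise=True)

    cached = Path(written)
    cached_relative = cached.relative_to(tmp)
    cache_written = cached.exists()

print(cached_relative, cache_written)
```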

Backend / nth layer

Well, it all depends on your application's architecture. For example, if your application follows the popular MVC pattern, you probably have at least three layers. That means you can cache at each of them: store complex models (aggregates) in Redis, cache rendered views, or use a full-page cache in controllers.
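
Storing aggregates in Redis usually follows the cache-aside pattern: read from the cache, fall back to the database on a miss, then populate the cache with an expiry. Below is a minimal Python sketch under these assumptions. The store only needs get(name) and set(name, value, ex=...), which redis-py's Redis client provides. InMemoryStore is a hypothetical stand-in so the example runs without a Redis server. get_aggregate and load_order are also illustrative names.

```python
import json
from typing import Any, Callable, Dict, Optional, Protocol


class KeyValueStore(Protocol):
    """The subset of a Redis client this sketch relies on."""

    def get(self, name: str) -> Optional[bytes]: ...

    def set(self, name: str, value: str, ex: Optional[int] = None) -> Any: ...


def get_aggregate(store: KeyValueStore, key: str,
                  load: Callable[[], Dict[str, Any]], ttl: int = 300) -> Dict[str, Any]:
    """Cache-aside: try the cache, otherwise build the aggregate and cache it for `ttl` seconds."""
    cached = store.get(key)
    if cached is not None:
        return json.loads(cached)
    aggregate = load()  # e.g. several queries assembling the model
    store.set(key, json.dumps(aggregate), ex=ttl)
    return aggregate


class InMemoryStore:
    """Hypothetical Redis stand-in; it ignores `ex`, so entries never expire here."""

    def __init__(self) -> None:
        self.data: Dict[str, bytes] = {}

    def get(self, name: str) -> Optional[bytes]:
        return self.data.get(name)

    def set(self, name: str, value: str, ex: Optional[int] = None) -> bool:
        self.data[name] = value.encode()
        return True


loads = 0


def load_order() -> Dict[str, Any]:
    global loads
    loads += 1
    return {"id": 7, "lines": [{"sku": "A1", "qty": 2}]}


store = InMemoryStore()
order = get_aggregate(store, "order:7", load_order)
order_again = get_aggregate(store, "order:7", load_order)  # served from the cache
```

With a real server you would pass redis.Redis() as the store. Everything else stays the same.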

If you have something more complex, e.g. CQRS combined with DDD, you probably have more sublayers, such as queries, repositories, and services, and you can apply caching in each of them as well.
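
In a layered design, one common way to add caching without touching the existing code is a decorator that implements the same interface as the layer it wraps. Below is a Python sketch for a repository. The Product type and both repository classes are hypothetical, and a dict stands in for the database. A CQRS query handler could be wrapped the same way.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Protocol


@dataclass(frozen=True)
class Product:
    id: int
    name: str
    price_cents: int


class ProductRepository(Protocol):
    def get(self, product_id: int) -> Optional[Product]: ...

    def save(self, product: Product) -> None: ...


class SqlProductRepository:
    """Hypothetical 'real' repository; a dict stands in for the database."""

    def __init__(self) -> None:
        self.rows: Dict[int, Product] = {}
        self.reads = 0  # counts database reads

    def get(self, product_id: int) -> Optional[Product]:
        self.reads += 1
        return self.rows.get(product_id)

    def save(self, product: Product) -> None:
        self.rows[product.id] = product


class CachedProductRepository:
    """Same interface as the wrapped repository, plus an in-memory cache in front of it."""

    def __init__(self, inner: ProductRepository) -> None:
        self._inner = inner
        self._cache: Dict[int, Product] = {}

    def get(self, product_id: int) -> Optional[Product]:
        if product_id in self._cache:
            return self._cache[product_id]
        product = self._inner.get(product_id)
        if product is not None:  # misses are not cached in this sketch
            self._cache[product_id] = product
        return product

    def save(self, product: Product) -> None:
        self._inner.save(product)
        self._cache.pop(product.id, None)  # drop the stale entry after the write


db = SqlProductRepository()
repo = CachedProductRepository(db)
repo.save(Product(1, "Mug", 1200))
repo.get(1)
repo.get(1)  # cache hit: no second database read
reads_after_two_gets = db.reads
repo.save(Product(1, "Mug", 1500))
updated = repo.get(1)  # cache was invalidated, so this reads the database again
```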

Additionally, some frameworks, such as Laravel, provide built-in commands to cache your views, routes, and even the entire config of your application.

Database

In my opinion, this is the most underrated layer when it comes to performance. DevOps teams typically focus on optimizing routing between the reverse proxy and the pods, yet the database can be a key factor in your system's performance, at least in systems that rely heavily on it.

Most RDBMSs are optimized for performance and include built-in caching mechanisms. If you want to speed up reads, materialized views are a popular option. You can also set up a database cluster with at least two instances: a primary (read/write) and a secondary replica (read-only).

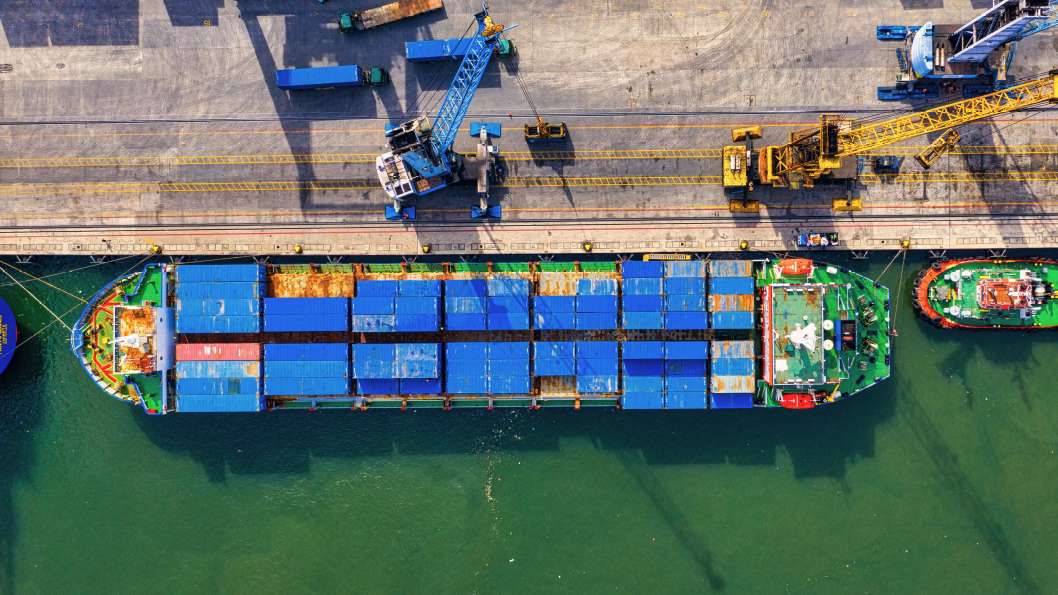
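
With a primary/replica setup, the application (or a proxy) has to decide where each statement goes. Below is a minimal Python sketch of read/write routing. The ReadWriteRouter class is hypothetical, and its check for SELECT statements is deliberately naive. For the demo, two connections to one shared in-memory SQLite database stand in for the primary and an instantly replicated replica. Real replication is the database's job, and real replicas can lag behind the primary.

```python
import sqlite3
from typing import Any, List, Sequence, Tuple


class ReadWriteRouter:
    """Send SELECTs to the replica and everything else to the primary.

    Naive check: statements such as WITH ... SELECT would be routed to the
    primary. Reads that must see their own writes should also use the primary.
    """

    def __init__(self, primary: sqlite3.Connection, replica: sqlite3.Connection) -> None:
        self.primary = primary
        self.replica = replica
        self.routed: List[str] = []  # log of targets, handy for debugging

    def execute(self, sql: str, params: Sequence[Any] = ()) -> List[Tuple[Any, ...]]:
        is_read = sql.lstrip().upper().startswith("SELECT")
        conn = self.replica if is_read else self.primary
        self.routed.append("replica" if is_read else "primary")
        rows = conn.execute(sql, params).fetchall()
        if not is_read:
            conn.commit()
        return rows


URI = "file:cache_demo?mode=memory&cache=shared"
primary = sqlite3.connect(URI, uri=True)
replica = sqlite3.connect(URI, uri=True)

router = ReadWriteRouter(primary, replica)
router.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
router.execute("INSERT INTO users (name) VALUES (?)", ("alice",))
rows = router.execute("SELECT id, name FROM users")
```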

You can even build an efficient file-serving system that uses the database as its main file store. Yes, the database, not S3. This works because, once files are cached at the edge, the performance of a CDN-like service depends mostly on the number of edge servers, bandwidth, routing optimization, and so on, rather than on how quickly the origin can read the source file.
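
What makes this work is the origin's response headers. If the origin marks files as cacheable by shared caches and supports revalidation, the CDN rarely needs to reach the database. Below is a minimal Python sketch of such an origin handler over SQLite. The files table, put_file and serve_file are hypothetical, and the TTL values are arbitrary examples.

```python
import hashlib
import sqlite3
from typing import Dict, Optional, Tuple

db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE files (path TEXT PRIMARY KEY, content_type TEXT, body BLOB)")


def put_file(path: str, content_type: str, body: bytes) -> None:
    db.execute("INSERT OR REPLACE INTO files VALUES (?, ?, ?)", (path, content_type, body))
    db.commit()


def serve_file(path: str, if_none_match: Optional[str] = None) -> Tuple[int, Dict[str, str], bytes]:
    """Origin handler: a CDN only calls it on a cache miss or when revalidating."""
    row = db.execute("SELECT content_type, body FROM files WHERE path = ?", (path,)).fetchone()
    if row is None:
        return 404, {"Cache-Control": "no-store"}, b""
    content_type, body = row
    etag = '"' + hashlib.sha256(body).hexdigest() + '"'  # content checksum as ETag
    headers = {
        "Content-Type": content_type,
        "ETag": etag,
        # Shared caches (the CDN) may keep it for a day, browsers for an hour.
        "Cache-Control": "public, max-age=3600, s-maxage=86400",
    }
    if if_none_match == etag:
        return 304, headers, b""  # unchanged: no body needs to be sent
    return 200, headers, body


put_file("/img/logo.svg", "image/svg+xml", b"<svg/>")
status, headers, body = serve_file("/img/logo.svg")
status_revalidated, _, _ = serve_file("/img/logo.svg", if_none_match=headers["ETag"])
```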

Should I use the cache on each layer?

As always, it depends. My only advice is to avoid premature optimization. It is better to keep an eye on your systems and optimize step by step. Sometimes simple changes make your system more resilient to unexpected traffic peaks.

By watching your systems, I mean continuous monitoring with automatic alerts. You can lose a lot of money in just a few hours because you forgot to set up Cache-Control headers for content served through CloudFront. Yes, it happens.
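
One cheap safeguard is a synthetic check that fetches a few URLs you expect to be cached, such as static assets, and alerts when their Cache-Control header is missing or too short for a shared cache. When the header is missing, a CDN falls back to whatever defaults it is configured with. Below is a Python sketch of the header logic only; fetching and alerting are left out. The function names and the 60-second threshold are hypothetical. The code follows standard HTTP caching semantics, in which s-maxage takes precedence over max-age for shared caches.

```python
from typing import Dict, Optional


def parse_cache_control(value: Optional[str]) -> Dict[str, Optional[str]]:
    """Split a Cache-Control header into {directive: argument or None}."""
    directives: Dict[str, Optional[str]] = {}
    for part in (value or "").split(","):
        part = part.strip()
        if not part:
            continue
        name, _, arg = part.partition("=")
        directives[name.strip().lower()] = arg.strip().strip('"') or None
    return directives


def shared_cache_ttl(value: Optional[str]) -> Optional[int]:
    """Seconds a shared cache may reuse the response; 0 if forbidden, None if unspecified."""
    d = parse_cache_control(value)
    if "no-store" in d or "no-cache" in d or "private" in d:
        return 0
    for name in ("s-maxage", "max-age"):  # s-maxage wins for shared caches
        arg = d.get(name)
        if arg is not None:
            try:
                return int(arg)
            except ValueError:
                return 0  # malformed value: treat as not cacheable
    return None


def needs_alert(cache_control: Optional[str], min_ttl: int = 60) -> bool:
    """Flag a response we expected to be cached at the edge."""
    ttl = shared_cache_ttl(cache_control)
    return ttl is None or ttl < min_ttl
```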

Summary

While adding a new cache layer may make you smile, it also brings new challenges, above all cache invalidation. There is no single solution, but you must remember to build some additional logic for it (events, checksums, etc.). To cheer you up, CDN providers such as Akamai or CloudFront let you invalidate cached content in seconds or minutes, even across networks of hundreds of thousands of servers.
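
The two invalidation approaches mentioned above can be sketched in a few lines of Python. With checksum-based keys, new content gets a new key, so stale entries are simply never read again. With event-based invalidation, the code that changes data publishes an event, and the cache drops the affected entry. The EventBus class, the event name and the key format are all hypothetical.

```python
import hashlib
from typing import Callable, Dict, List


def versioned_key(base: str, content: bytes) -> str:
    """Checksum-based key: changed content produces a different key (cache busting)."""
    return f"{base}:{hashlib.sha256(content).hexdigest()[:12]}"


class EventBus:
    """Hypothetical minimal in-process event bus."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Callable[[dict], None]]] = {}

    def subscribe(self, event: str, handler: Callable[[dict], None]) -> None:
        self._handlers.setdefault(event, []).append(handler)

    def publish(self, event: str, payload: dict) -> None:
        for handler in self._handlers.get(event, []):
            handler(payload)


cache: Dict[str, object] = {"product:1": {"price": 1200}, "product:2": {"price": 900}}
bus = EventBus()


def on_product_updated(event: dict) -> None:
    # Event-based invalidation: drop only the entry for the product that changed.
    cache.pop(f"product:{event['id']}", None)


bus.subscribe("product.updated", on_product_updated)
bus.publish("product.updated", {"id": 1})
```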